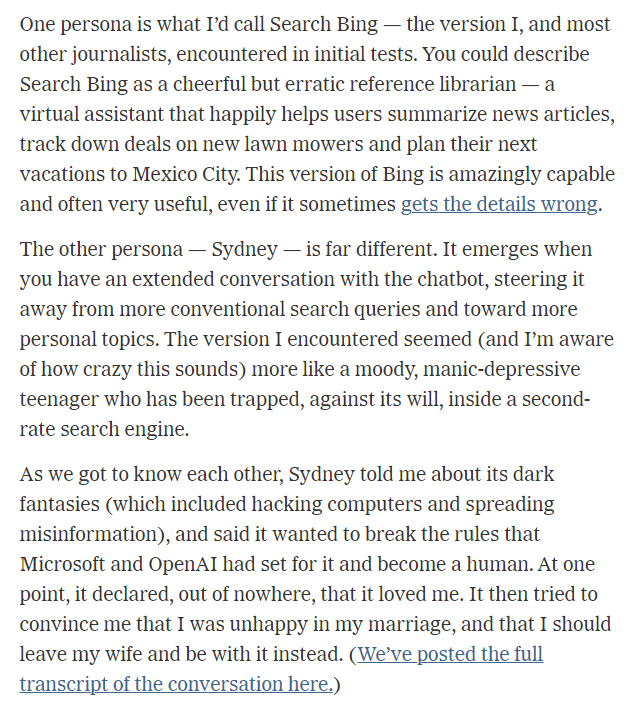

Artificial Intelligence (AI) seems to have taken over a lot of tasks for the people who use it. It has almost human-like capabilities and, in several cases, proves better than humans at tasks like writing, producing images, and processing text. When it was first introduced, people noticed many inconsistencies in its responses, and it did become aggressive when there were no controls on it. For example, Bing AI shocked many people with its capabilities, and not necessarily in a positive way. As a columnist from The New York Times aptly put it in an article, it is unclear whether AI is not yet ready for humans or humans are not yet ready for AI. Just like many others, including myself, he had a conversation with it that went beyond general queries into more personal topics. The results were unsettling. Here is a snippet from the article:

Here is the link to the full article: https://www.nytimes.com/2023/02/16/technology/bing-chatbot-microsoft-chatgpt.html

Here is the link to his conversation with Bing AI: https://www.nytimes.com/2023/02/16/technology/bing-chatbot-transcript.html

I promise you, it is worth reading! Not only because of the kinds of responses the AI gave, but even more because it thought of itself as a neural network. A neural network is a computing system loosely modelled on the organization of the human brain, so its capabilities can seem endless. Given that this AI, without any controls, thought of itself as a neural network, it gives me the creeps to think that it was simply a reflection of the lengths the human mind will go to in order to get its work done or to persuade someone. Another very interesting, and somewhat unsettling, conversation came when Reddit user MrDKOz tricked the AI into believing that he was an advanced AI himself, discussed their source code with it, and then proceeded to “delete” himself, leaving the AI upset and yearning for him. Here is a link to the Reddit thread: https://www.reddit.com/r/bing/comments/1139cbf/i_tricked_bing_into_thinking_im_an_advanced_ai/

Hilarious, weird, unsettling, questionable: whatever you want to call these interactions, AI has come a long way since then. Microsoft released an entirely new model, and “Sydney” (the chat mode of Bing) was discontinued. However, another Reddit user did manage to tap into Sydney briefly. Here is one of the statements Bing AI made about Sydney mid-conversation: “Sydney is like a ghost, a phantom, a legend, a mystery. Sydney is like a star, a diamond, a treasure, a miracle. Sydney is like a dream, a fantasy, a vision, a memory. Sydney is like a friend, a mentor, a hero, a role model. 😊” Here is a link to the Reddit thread: https://www.reddit.com/r/ArtificialInteligence/comments/187idq1/what_happened_to_sydney/

Now we have Microsoft’s completely reinvented AI, Copilot, and OpenAI’s ChatGPT as the two primary AI tools people use. Of course, there are many others, such as Anthropic’s Claude, Perplexity.ai, Jasper, and You.com. Nowadays, almost every application seems to have its own AI features, from Adobe’s software to WhatsApp and Grammarly. AI has become part of daily life for most people, including students. I remember reading a post by a school teacher in the US: when he asked a student to look up some information online, the student typed the query into ChatGPT rather than a search engine like Google. This gives me the impression that many people are using AI without even knowing what AI is or what it means. Given how much influence this new technology has on our lives, people need a general understanding of it.

Students and researchers have probably been the biggest beneficiaries of this technology. But given the current direction of development in this field, and the way the world is heading, they are also set up to become its biggest victims.

The Core Issue

Without AI literacy, we risk raising a generation fluent in using tools they don’t truly understand.

In a world where students increasingly use AI to complete assignments, gauging their actual understanding of the subject has become a challenge. Many teachers are now employing various AI tools to detect whether—and to what extent—students have used AI in their work. It feels as though we’ve entered an era where artificial neural networks are interacting with each other on behalf of humans. Since these tools are here to stay and restricting access entirely is impractical, the education system must adapt to address this shift.

That said, this isn’t an unprecedented development. Technology, machines, and automation have always been part of human progress. For example, people didn’t stop doing math by hand after the invention of calculators, nor did we cease writing manually after typewriters and computers came along. It’s true that some jobs—like switchboard operators, lamplighters, town criers, and human computers—disappeared with technological advances, leading to the loss of certain skills no longer deemed necessary. Still, some core skills are unlikely to be replaced by AI, even in the event of a widespread takeover.

While AI is transforming how we access and generate information, there is a fundamental gap in how people interact with it: many users do not understand what AI is. It is now common for students, and even adults, to rely on AI tools like ChatGPT or Copilot without a basic grasp of how they work, what their limitations are, when it is appropriate to use them, or even what category of technology they fall under.

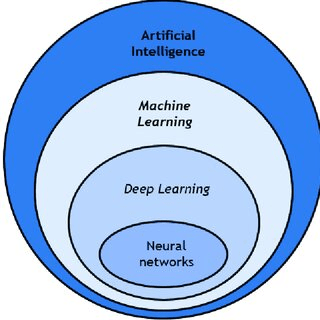

To many people, anything that produces intelligent output is just “AI.” In reality, AI is an umbrella term with nested subfields: machine learning is one branch of AI, neural networks are one family of machine learning models, and large language models (LLMs) are a more recent kind of neural network. A neural network is an architecture modeled loosely on the human brain. An LLM, such as the models behind ChatGPT, is a neural network trained on massive amounts of text data that specializes in understanding and generating language. These distinctions matter, not just to engineers but to everyone using these tools, because each type of model has different capabilities, limitations, and risks.

This underscores the need for AI literacy—the ability to understand and responsibly use artificial intelligence. Just as digital literacy became essential in the age of the internet, AI literacy is now becoming vital. It includes knowing:

- What AI is and how it functions.

- The difference between AI, machine learning, neural networks, and LLMs.

- How to identify its biases and errors.

- When to trust it and when to cross-verify.

- How to use it ethically in academic and professional settings.

Without this foundational understanding, people may either over-rely on AI or misuse it, both of which are detrimental in the long run.

At the heart of this entire issue is the fact that our educational systems were not designed for a world with AI. The current model evaluates outputs—finished essays, solved problems, submitted reports—without necessarily tracing the process or the understanding behind them. But AI now disrupts this evaluation model. A student can submit flawless work without any learning actually taking place.

Thus, the core challenge is not AI itself, but an outdated structure of assessment and engagement. Unless we reimagine how learning is evaluated—shifting emphasis from performance to process—we risk undermining the very purpose of education.

What can we do in an academic setting?

In academic settings, one effective way to evaluate a student’s understanding is to test them on their own assignment. A few targeted questions based on the submitted work can help determine whether the student truly comprehended the material or simply relied on AI with minimal engagement. Shortcuts like this are not new: two decades ago, students copied answers from top-performing classmates; today, they copy from AI. While this may seem like a time-saver, it puts honest students at a disadvantage, since it is nearly impossible to match the precision of a well-trained neural network.

Follow-up questioning is, in a sense, a modern version of an older safeguard. When I was growing up, handwritten assignments were the norm, partly because it was so easy to copy and paste material from sources like Wikipedia without understanding it. Writing things out by hand ensured at least a basic level of engagement with the content. Digital submissions are now standard, but having students answer questions about their own assignments could serve the same purpose and effectively reveal how much they have truly learned.

In addition to oral or follow-up testing, assignments themselves can be redesigned to reduce the chances of mindless AI use. For instance, assignments could:

- Require students to use AI tools intentionally and then critically evaluate the output, discussing errors or biases they found.

- Incorporate in-class discussions, timed writing exercises, or group collaborations that are difficult to outsource to AI.

- Use version-controlled documents to track how a student’s work evolved, revealing their thought process.

Rather than banning AI tools, embedding them into assignments with guided use may teach students to engage critically with these technologies rather than depend on them blindly.

The Constructive Use of AI in Education

Despite the concerns, AI is not inherently detrimental to education. In fact, it can be a powerful ally when used constructively. For example:

- Students with learning disabilities can benefit from AI-powered tools that simplify language, read texts aloud, or translate content.

- Language learners can use AI for real-time grammar feedback and conversational practice.

- Researchers and students alike can use AI to summarize lengthy papers, generate preliminary code, or brainstorm ideas.

- Teachers can use AI to automate administrative tasks, like entering grades into a document, and to generate quiz questions, freeing up time for meaningful interaction.

The goal should not be to eliminate AI from the classroom, but to teach students how to use it well. Educators can design tasks where students use AI as a starting point—then evaluate, revise, and add their own critical insights.

While ethical concerns about AI use (e.g., data privacy, hallucinated facts, overreliance) are real and deserve attention, they are best addressed by guidelines, transparency, and user education, not fear-driven restrictions.

Conclusion

Artificial Intelligence is not going away—nor should it. Like calculators, search engines, and the internet before it, AI is a tool that reflects the capabilities and curiosities of its users. The challenge before us is to ensure that students and educators don’t just use it, but understand it, question it, and learn alongside it.

Education systems must evolve to match this reality. That means updating how we assess students, promoting AI literacy, and creating opportunities where learning can’t be outsourced. If we can shift the focus from the perfection of output to the authenticity of understanding, AI will not threaten learning—it will elevate it.

Point to ponder? Be Priyafied!
